Table of Contents

What are AI Hallucinations? Signs, Risks, & Prevention

AI hallucinations pose a growing challenge for enterprise teams exploring generative AI. These errors can introduce false or misleading information into outputs — and often, the content appears entirely confident and convincing.

In one study evaluating the legal use of AI, hallucination rates ranged from 69% to 88% when responding to specific legal queries using state-of-the-art language models.

That’s a serious risk for organizations across sectors. Hallucinated content can misinform employees or customers, support poor decision-making, and even cause reputational harm — or real-world consequences — in high-stakes domains like healthcare and finance. In fact, some LLM providers have already faced lawsuits tied to false or defamatory content generated by their systems.

If your organization is exploring generative AI, it’s critical to recognize the signs of hallucination, assess the risks specific to your use case, and put guardrails in place. This article will show you how.

What are AI hallucinations?

AI hallucinations occur when a model generates incorrect or misleading outputs — content that may appear fact-based or coherent but is ultimately inaccurate or nonsensical.

While hallucination is the standard term in AI, it’s sometimes discussed alongside concepts like confabulation, fabrication, or creative guessing. These aren’t technically the same — for instance, confabulation comes from psychology and refers to unintentionally filling memory gaps — but all attempt to describe the same underlying behavior: producing information that sounds plausible but isn’t grounded in fact.

AI hallucinations can take many forms: confidently citing research that doesn’t exist, inventing sources or statistics, or offering a “best guess” framed as a factual statement.

These errors happen because large language models generate responses based on statistical patterns in data — not from a humanlike understanding of facts, context, or the world itself.

How do AI hallucinations occur?

AI hallucinations are a side effect of how large language models (LLMs) are built.

Despite sounding intelligent, LLMs aren’t grounded in real-world knowledge. They’re designed to predict the next word in a sequence based on patterns in their training data — not to understand facts the way humans do.

Some models are trained on narrow, domain-specific data; others on vast, general-purpose datasets. Broadly trained models — especially those using open web data — tend to hallucinate more often. In contrast, task-specific models that have been fine-tuned with curated data and reinforced through feedback loops typically perform better, though they’re not immune. Even hybrid approaches like retrieval-augmented generation (RAG) — which supplement responses with information from trusted sources — can still produce hallucinated content.

Because LLMs are optimized to generate fluent, coherent language — not to verify facts — they can’t be relied upon to consistently deliver truth. Their strength lies in creativity and expressiveness, but that same creativity can result in wildly implausible or entirely false outputs, confidently delivered.

This makes LLMs well-suited for applications like conversational interfaces or brainstorming, where style and engagement matter more than precision. But in high-stakes environments where accuracy is critical, the model’s tendency to guess, fill in gaps, or prioritize what sounds right over what is right becomes a serious risk.

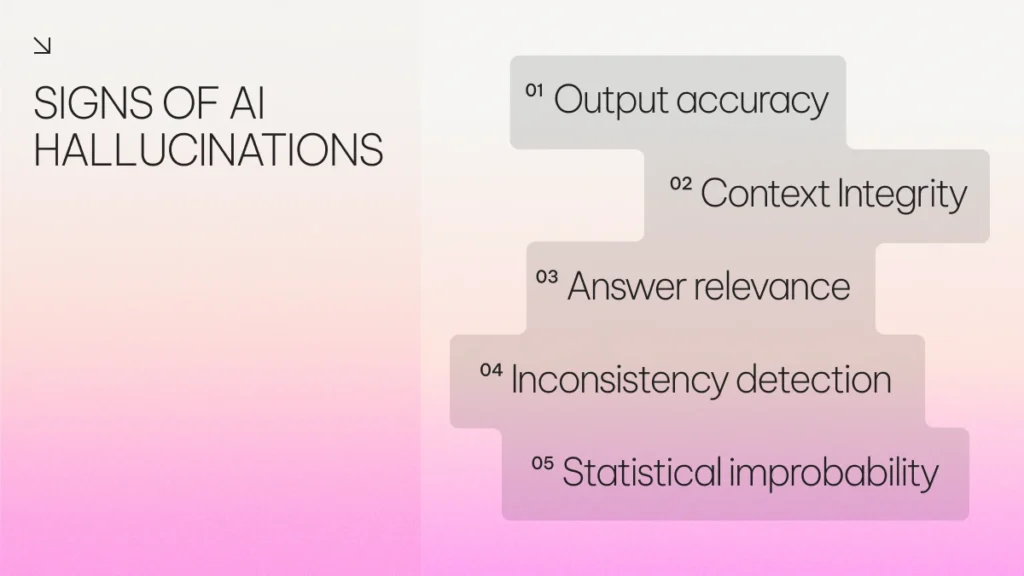

Signs of AI hallucinations

Hallucinations are likely to occur in any AI system, especially those relying on large language models. That’s why it’s critical to implement robust quality assessment processes and guardrails. Enterprises need a clear understanding of how, where, and how often hallucinations appear, so they can recognize the warning signs early.

Below are the key categories of hallucination to monitor, along with typical signs to watch for:

Output accuracy

This occurs when a model provides information that is not supported by the prompt or source material — even if it sounds correct on the surface.

Signs include:

- Generating information that isn’t present in the provided context

- Confidently presenting inaccurate or fabricated content

- Answering with plausible but false information, despite lacking sufficient context

- Offering nonsensical or incoherent answers when context is missing

- Inventing sources, citations, or specific details

- Contradicting known or supplied information

Context Integrity

Context integrity failures happen when the model generates responses as if they’re derived from the source material — but a review shows no such information exists. When challenged, the model may deny inventing content or acknowledge the mistake yet still repeat the claim.

Signs include:

- Referring to statements as if they appear in the context — e.g., “as stated above” — when they do not

- Adding unsupported facts without being prompted

- Attempting to answer without requesting necessary context or clarification

- Responding with certainty, then deflecting or looping when asked to verify the source or explanation

Answer relevance

Hallucinations in relevance occur when the model’s response is factually correct but not appropriately focused on the question asked. The information may be real, but its inclusion is unwarranted or tangential.

Signs include:

- Including unnecessary elaborations or excessive detail

- Shifting topics or introducing unrelated information

- Ignoring specific instructions or constraints

- Generating filler content that doesn’t serve the original query

Inconsistency detection

LLMs lack a consistent internal model of truth, which makes them prone to contradictions — especially in longer, multi-part prompts.

Signs include:

- Self-contradictions within a single response

- Providing different answers to the same question during a session

- Making conflicting claims without acknowledging the contradiction

- Justifying contradictions with invented or unrelated claims

Statistical improbability

Because LLMs generate content based on linguistic likelihood — not real-world probability — they can fabricate or distort numerical data, especially when dealing with outliers or rare events.

Signs include:

- Presenting data, percentages, or studies that sound overly optimistic or suspiciously precise

- Producing statistically improbable outcomes with full confidence

- Offering plausible-sounding figures or claims not grounded in the provided context

- Sharing historically inaccurate or anachronistic information

Specific risks of AI hallucinations in the enterprise

LLMs are known to struggle with complex tasks that require nuance, contextual reasoning, and factual precision — particularly when historical understanding or domain-specific expertise is involved.

While hallucinations may be inconvenient in personal or low-stakes use cases, in an enterprise setting, they can lead to serious operational, legal, reputational, or even safety-related consequences. Below are examples of how these risks manifest across key industries:

Fabricated citations in research

In enterprise research — whether for academic, scientific, or business purposes — hallucinated citations can have damaging ripple effects. One study analyzing medical research proposals found that out of 178 references generated by GPT-3, 69 contained incorrect or nonexistent digital object identifiers (DOIs), and an additional 28 could not be located via search, indicating fabrication.

These kinds of errors can result in:

- False or unverifiable claims being published and disseminated

- The inclusion of fictitious authors or journals

- The propagation of unsupported or inaccurate conclusions

In sectors like pharmaceuticals, medical devices, or legal services, such misinformation could have regulatory and reputational consequences.

Misleading data use in AI-powered medical diagnostics

In clinical applications, factual accuracy is non-negotiable. Hallucinations in diagnostic tools, medical summaries, or treatment guidance can introduce ethically unacceptable risks.

Examples include:

- Misrepresentation of clinical study results

- Inaccurate claims about drug efficacy or treatment options

- Hallucinated advice during AI-powered patient interactions

In healthcare, hallucinations don’t just erode trust — they could contribute to real-world harm or life-threatening outcomes.

Dialogue history-based hallucinations in retail support

In retail, customer trust hinges on accurate, consistent information. When AI-powered support tools misremember past interactions or fabricate dialogue history, the consequences can range from frustration to reputational damage.

Risks include:

- Providing incorrect order histories or fabricated prior commitments

- Suggesting non-existent promotions or support policies

- Undermining the reliability of customer service automation

This undermines the customer experience and can escalate costs through increased human intervention and churn.

Fabricated dates or details in financial reporting

In finance, precision matters. A single hallucinated date, percentage, or economic indicator can have outsized consequences — whether in internal reporting, forecasting, or public communications.

Potential impacts include:

- Misstated historical events affecting forecasting models

- Incorrect metrics altering investment decisions

- Misalignment with actual reporting cycles or regulatory disclosures

Even small deviations from real-world financial data can lead to poor decision-making, regulatory scrutiny, or loss of stakeholder confidence.

How to prevent AI hallucinations

Some level of hallucination is to be expected when using large language models — but the key to responsible enterprise adoption is evaluating how closely the model’s outputs align with the data and context you provide.

Several strategies and tools can help improve AI output quality and reduce the risk of hallucinations. One of the most effective is implementing structured evaluation frameworks, supported by model tuning, grounding techniques, and human oversight.

Evaluation frameworks

Selecting an LLM is not just a technical decision — it’s a trust decision. Choosing the right model architecture, size, and context window is foundational, especially for use cases that require retaining and reasoning over information across longer interactions.

Evaluation frameworks allow organizations to systematically assess a model’s tendency to hallucinate and its ability to respond accurately within its context. These assessments typically focus on the following dimensions:

- Output Accuracy: Measures the model’s ability to provide correct answers when information is present — and to refrain from guessing when it is not. This includes tracking false positives (hallucinations) where the model confidently supplies incorrect information.

- Context Integrity: Assesses how the model handles questions that are unanswerable based on the provided input. High-performing models should acknowledge the lack of information rather than fabricate answers.

- Answer Relevance: Evaluates whether the model’s responses are focused, on-topic, and appropriate to the question asked — without introducing unnecessary or tangential information.

Train on enterprise content

For enterprise-specific tasks, Retrieval-Augmented Generation (RAG) is a powerful method to reduce hallucination. RAG works by giving the model access only to curated enterprise data — enabling it to generate answers grounded in known, trusted content rather than relying on general web training.

While effective, this approach can introduce overfitting risks, where the model becomes highly confident in its limited knowledge base but struggles with novel or ambiguous inputs.

A balanced approach includes training on a diverse and comprehensive set of enterprise content, combined with ongoing evaluation and domain-specific fine-tuning.

Use filters to detect hallucinations

Some tools and techniques can help flag potentially unreliable AI outputs before they’re used in real-world applications. Many AI systems assign internal scores to each word they generate — reflecting how likely that word is, based on patterns learned during training.

By analyzing these likelihood scores, organizations can apply filters or thresholds to identify low-confidence responses that may be more prone to hallucination. These filters can act as early warning signs, helping reduce the risk of made-up or misleading information reaching end users.

That said, these detection methods aren’t foolproof. They provide useful signals, but they operate on probability rather than certainty. Keeping a human in the loop — someone who can review both the AI-generated content and any system flags — adds an important layer of oversight, particularly when accuracy is critical.

What’s next for AI hallucinations

While hallucinations remain a known limitation of today’s language models, progress is moving quickly. Advances in architecture, training techniques, and evaluation methods are already improving model reliability — and the development of dedicated tools to detect and reduce hallucinations continues to accelerate.

In the meantime, organizations are far from powerless. By improving the quality and balance of training data, grounding models in enterprise-specific content, and incorporating thoughtful human oversight, it’s possible to significantly reduce the impact of hallucinated responses.

As AI adoption grows, so does the industry’s ability to shape safer, more accurate, and more context-aware systems. The models may not be perfect yet — but with the right guardrails, the path forward is not just promising, but actionable.