Enterprises aren’t short on AI ambition, but many are stuck at the starting line. Some 82% of them report data silos that block critical workflows, making it nearly impossible to build effective, scalable AI solutions. The result? Disconnected efforts, stalled pilots, and mounting frustration.

But some are breaking through. Organizations implementing private AI report 2.4x higher productivity and 3.3x more success scaling generative AI — not because they’re using more AI, but because they’re using it differently.

Private AI doesn’t just plug into existing systems; it’s built to respect enterprise data boundaries, reduce risk, and unlock speed at scale. But realizing its value means understanding more than just the “what.”

Here we break down private AI, how it works, the platforms powering it, and the strategies that separate AI leaders from laggards.

What is private AI?

Private AI is a security-first approach to deploying artificial intelligence that protects sensitive data throughout its entire lifecycle. It enables organizations to develop and deploy AI models while keeping raw data local, encrypted, or anonymized, so that personal or proprietary information is exposed and centralized as little as possible.

Unlike public AI models that often centralize data in the cloud, private AI keeps sensitive information within an organization’s controlled environment. The organization maintains control, whether that data resides on internal servers, user devices, or inside encrypted containers.

This is made possible through privacy-preserving techniques such as:

- Federated learning: Trains AI models across multiple systems without transferring raw data.

- Differential privacy: Masks individual data points while preserving useful patterns.

- Homomorphic encryption: Enables computation on encrypted data.

- Trusted Execution Environments (TEEs): Protect data during processing using secure hardware zones.

In industries like healthcare, finance, and retail, where data privacy is a regulatory and reputational concern, private AI offers a way for enterprises to adopt intelligent systems without compromise, embedding data protection by design.

Why private AI matters for enterprises

Unlike public AI models that depend on centralized, shared datasets, private AI keeps enterprise data where it belongs — within your infrastructure, governed by your policies, and protected from third-party access. This approach allows enterprises to apply AI to sensitive, proprietary, or regulated information without compromising control or security.

For industries where compliance and data privacy aren’t optional, private AI deployment supports alignment with regulations such as HIPAA, GDPR, and GLBA. It helps ensure that data remains protected whether processed, transmitted, or stored.

But the value goes beyond compliance. Private AI creates strategic differentiation. When organizations can securely tap into exclusive datasets — like customer histories, product telemetry, or supply chain operations — they unlock insights that general-purpose public models can’t match. This leads to automation tailored to internal processes, hyper-personalized customer experiences, and faster, data-driven innovation, without sacrificing privacy.

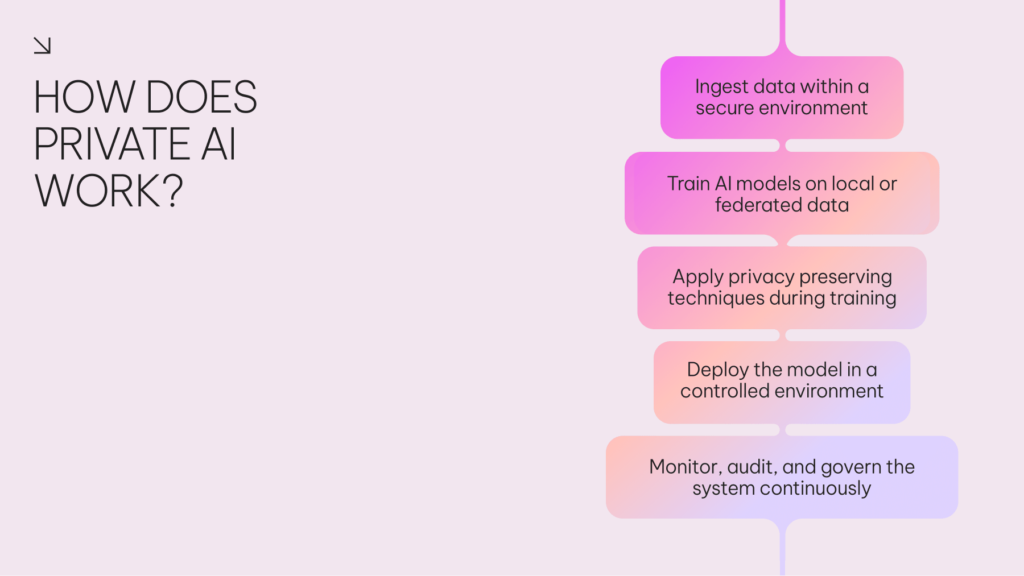

How does private AI work?

Private AI enables enterprises to train, deploy, and monitor AI systems without compromising sensitive data. Here’s how the process works from start to finish:

1. Ingest data within a secure environment

Private AI begins with data ingestion and preprocessing, but unlike traditional approaches, all operations happen within the organization’s infrastructure. This could be an on-premises data center, a Virtual Private Cloud (VPC), or a secure Kubernetes-based environment like Amazon EKS. The goal: maintain complete control over sensitive records while enabling model access to relevant training data.

2. Train AI models on local or federated data

Once the data is curated, models are trained directly on enterprise datasets, either in a centralized location or using federated learning for decentralized sources.

Federated learning allows models to learn patterns across multiple endpoints (e.g., branches, edge devices) without moving the raw data, ensuring data residency and confidentiality. However, many enterprises opt for fine-tuning open-weight models locally, which AI21 supports through download-ready versions of Jamba models and custom private enterprise deployments.
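
To make the federated option more concrete, here is a minimal sketch of federated averaging in plain Python. The one-parameter linear model, the two branch datasets, and the function names `local_update` and `federated_average` are illustrative assumptions, not part of any vendor API. A production system would more likely build on a framework such as Flower or NVIDIA FLARE.

```python
# Minimal federated-averaging sketch (illustrative only).
# Each site fits y = w * x on its own data and shares only the updated weight.

def local_update(w, data, lr=0.01, epochs=20):
    """Run a few gradient-descent steps on one site's private data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(weights, sizes):
    """Combine site weights, weighted by each site's number of examples."""
    total = sum(sizes)
    return sum(w * n for w, n in zip(weights, sizes)) / total

# Hypothetical private datasets; both follow y = 3x and never leave their site.
site_a = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]
site_b = [(4.0, 12.0), (5.0, 15.0)]
sites = [site_a, site_b]

global_w = 0.0
for _ in range(30):  # communication rounds
    local_ws = [local_update(global_w, data) for data in sites]
    global_w = federated_average(local_ws, [len(d) for d in sites])

print(round(global_w, 3))
```

Only the scalar weight crosses the site boundary in each round, and the shared model still converges toward the slope that both datasets follow. Real deployments usually also encrypt or securely aggregate these updates, which this sketch omits.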

3. Apply privacy-preserving techniques during training

During training, private AI incorporates techniques like differential privacy (which adds statistical noise to obscure individual data points) and encryption-in-use (which protects data even while it’s being processed). These safeguards reduce risk without significantly degrading model performance.
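
As a rough illustration of how noise can be added during training, the sketch below shows one DP-SGD-style aggregation step: each example's gradient is clipped to a maximum norm, then Gaussian noise is added to the sum. The function names, the sample gradients, and the parameter values are assumptions chosen for illustration. The sketch does not track the privacy budget (epsilon), which libraries such as Opacus or TensorFlow Privacy handle in practice.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    sigma = noise_multiplier * max_norm
    noisy_sum = [
        sum(g[i] for g in clipped) + rng.gauss(0.0, sigma)
        for i in range(dim)
    ]
    return [s / len(clipped) for s in noisy_sum]

rng = random.Random(0)  # fixed seed only so the sketch is reproducible
grads = [[0.5, -0.2], [30.0, 40.0], [0.1, 0.3]]  # hypothetical gradients
print(private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds how much any single record can move the model, and the noise masks what remains of that influence. A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates.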

4. Deploy the model in a controlled environment

Once training is complete, the model is deployed in a production-grade, secured environment — typically a private cloud (VPC), on-premises stack, or hybrid infrastructure. Deployment environments are configured to align with internal policies and external regulatory requirements.

5. Monitor, audit, and govern the system continuously

Post-deployment, private AI systems are actively monitored to support ongoing compliance, model performance, and risk management. Enterprise-grade solutions support:

- Detailed usage and access logging.

- Built-in audit-readiness features.

- Controlled update pipelines for model refinement.

- Lifecycle governance across retraining cycles.

This ensures that AI systems remain accountable, traceable, and secure — even as business needs change.
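
One way to make usage logs audit-ready is to chain entries together with hashes so that later edits are detectable. The sketch below is a minimal illustration of that idea, not a description of any specific product. The field names, user names, and model versions are hypothetical, and timestamps are left out to keep the example deterministic.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry

def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log, user, action, model_version):
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"user": user, "action": action,
            "model_version": model_version, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})

def verify(log):
    """Return True only if no entry has been altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, "analyst_1", "inference", "v1.2")
append_entry(audit_log, "ml_admin", "retrain", "v1.3")
print(verify(audit_log))
```

Changing any field of an earlier entry breaks verification for the chain. Detecting entries dropped from the end of the log would additionally require anchoring the latest hash somewhere outside the log.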

What are common enterprise use cases for private AI?

Private AI allows organizations to harness data-driven innovation without compromising privacy, trust, or compliance. Here are some specific use cases for highly regulated verticals:

Healthcare: Better outcomes without breaching trust

Healthcare providers must comply with data protection regulations like HIPAA while relying on sensitive patient data for diagnostics, treatment optimization, and research. Private AI offers a secure foundation for advancing care without data leakage.

- Collaborative diagnostics: Hospitals use federated learning to train shared AI models for disease detection (such as cancer or COVID-19 outcomes) without exchanging patient records. Each hospital trains locally, contributing only encrypted model updates.

- Secure research environments: Medical research centers run AI models inside private containers or VPCs, enabling experimentation on anonymized or synthetic data sets without external data exposure.

- Encrypted clinical trials: Pharmaceutical companies aggregate trial data from multiple hospitals using techniques like homomorphic encryption or secure multi-party computation (SMPC), allowing analysis without compromising patient privacy.
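
The secure multi-party computation mentioned above can be illustrated with additive secret sharing, one of its simplest building blocks. In this sketch, three hypothetical hospitals compute a combined count without any party seeing another's raw number. The counts, the modulus, and the function names are assumptions for illustration, and a real protocol would also need authenticated channels and protection against dishonest participants.

```python
import secrets

PRIME = 2**61 - 1  # modulus for the shares; an illustrative choice

def share(value, n_parties):
    """Split value into n random shares that sum to value modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    """Recover the secret by summing all shares modulo PRIME."""
    return sum(shares) % PRIME

# Hypothetical per-hospital counts of patients who responded to treatment.
hospital_counts = [120, 75, 310]
n = len(hospital_counts)

# Each hospital splits its count and sends one share to every party.
all_shares = [share(c, n) for c in hospital_counts]

# Each party adds the shares it received; no party sees a raw count.
partial_sums = [sum(all_shares[h][p] for h in range(n)) % PRIME
                for p in range(n)]

print(reconstruct(partial_sums))  # prints 505
```

Each individual share is a uniformly random number, so it reveals nothing on its own. Only the combined total of 505 becomes visible once the partial sums are pooled.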

Finance: Smarter systems, safer data

In finance, where data is both an asset and a liability, private AI enables institutions to deploy intelligence while ensuring strict compliance with regulations like GLBA and SOX.

- Fraud detection: Banks deploy models within their secure infrastructure to detect suspicious transactions in real time, with no external logging or data sharing. In some collaborative efforts, encrypted model updates are exchanged to improve detection across institutions.

- Credit scoring: Financial institutions leverage secure multi-party computation to compute risk assessments and credit scores using data across organizations, all while keeping customer records encrypted and siloed.

- Personalized services: Banking apps run lightweight AI models locally on a customer’s device to analyze spending behavior and suggest actions, with no cloud inference, minimizing risk and latency.

Retail: Personalized experiences, private data

Retailers increasingly rely on behavioral and purchase data to create highly personalized experiences, but must do so without undermining consumer trust.

- On-device personalization: Retail apps deliver real-time product recommendations using AI running locally in browsers or mobile apps. This avoids transmitting browsing histories or transaction logs to external servers.

- In-store analytics with privacy: Smart stores use edge-deployed AI to analyze foot traffic and optimize layouts or staffing, but retain all video and sensor data within on-premises infrastructure.

- Collaborative forecasting: Retailers and suppliers apply privacy-preserving analytics to jointly forecast demand from encrypted sales data, enabling inventory optimization without disclosing customer-specific or competitive information.

What platforms and technologies support private AI at scale?

As enterprise adoption of private AI accelerates, organizations are increasingly turning to a mature, modular ecosystem of frameworks, libraries, and platforms that make privacy-preserving AI viable in production environments, not just research labs.

Rather than building from scratch, enterprises can combine open-source toolkits with commercial-grade solutions to achieve scalable, compliant, and secure deployments tailored to their industry and risk posture.

- Open-source frameworks such as PySyft (by OpenMined) and Flower provide accessible entry points for implementing federated learning, secure computation, and differential privacy. These tools are popular among research teams and enterprise pilots due to their active communities and compatibility with mainstream machine learning libraries like PyTorch and TensorFlow.

- For organizations that need enterprise-grade capabilities from day one, providers like AI21 offer production-ready, open-weight models that can be privately deployed within virtual private clouds (VPCs) or on-prem environments. AI21’s Jamba model family supports industry-leading long-context performance and low hallucination rates, with deployments available across trusted cloud platforms like Google Cloud Vertex AI, Azure AI, and NVIDIA NIM.

- For more structured, enterprise-grade deployments, platforms such as IBM Federated Learning and NVIDIA FLARE are well suited to regulated industries like healthcare and finance. Microsoft SEAL is a leading library for homomorphic encryption, while Azure and Google Cloud provide confidential computing and differential privacy capabilities as part of their AI service portfolios.

- Specialist startups — including TripleBlind, Enveil, and Cape Privacy — focus on encrypted collaboration, privacy-preserving analytics, and secure model sharing across organizations, making them well-suited for cross-enterprise AI initiatives.

The result is a vibrant, modular ecosystem: enterprises can combine open-source components with commercial platforms, tailor deployments to their specific needs, and move from proof of concept to production — all while maintaining a focus on privacy, compliance, and scalability.

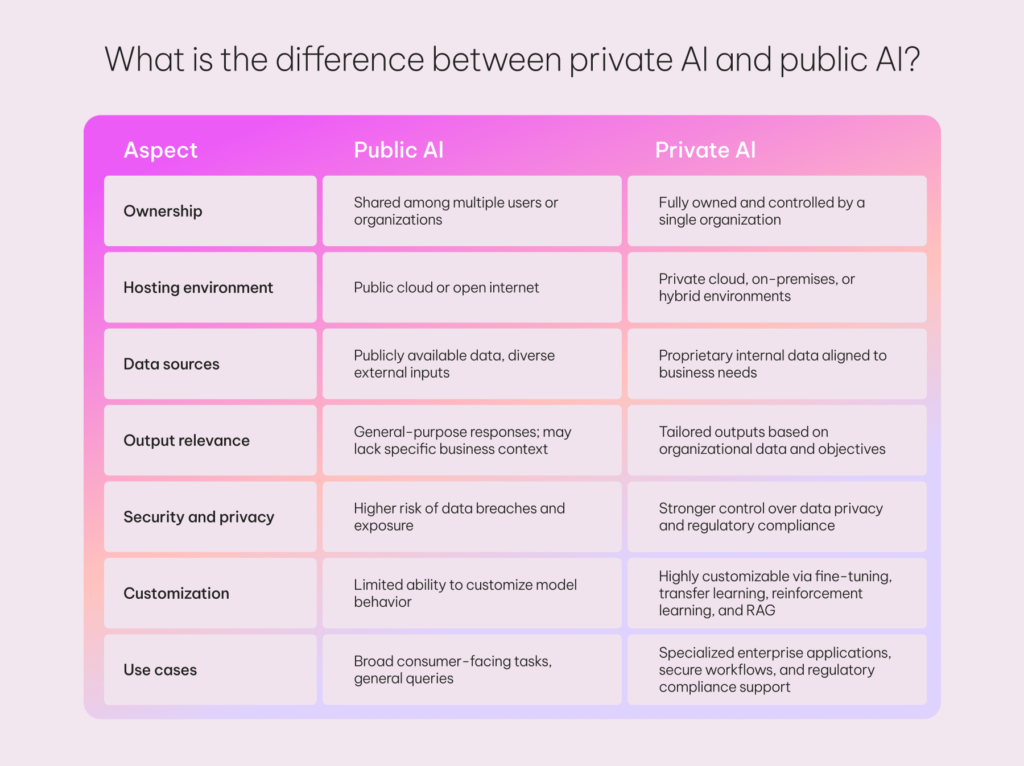

What is the difference between private AI and public AI?

Organizations exploring AI adoption must understand the key differences between private and public AI models, especially when managing sensitive data or operating in regulated environments.

- Public AI models are trained on large volumes of data from open web sources and, importantly, often continue to learn from new user interactions. This ongoing cycle can introduce significant privacy risks, as inputs may be ingested into future training sets, potentially exposing sensitive information or intellectual property. While powerful, these models often lack context alignment for enterprise use, and their shared infrastructure and limited customization can add further security vulnerabilities.

- Private AI, on the other hand, is deployed within an organization’s infrastructure — whether in a VPC, on-prem, or a containerized stack. These models are trained or fine-tuned on proprietary data and do not share information externally. They can be adapted using transfer learning or retrieval-augmented generation (RAG) to deliver domain-specific accuracy, regulatory compliance, and full data governance.
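
To show the retrieval step of RAG in miniature, the sketch below ranks a few internal documents against a question and places the best match into a prompt. It uses simple word-count cosine similarity in place of the embedding model a real system would use. The documents, the question, and the prompt wording are hypothetical, and the call to a privately hosted model is deliberately left out.

```python
import math
from collections import Counter

def vectorize(text):
    """Represent text as lowercase word counts (a stand-in for embeddings)."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, vectorize(d)),
                    reverse=True)
    return ranked[:k]

# Hypothetical internal documents that stay inside the organization.
internal_docs = [
    "Refund requests over 500 USD require manager approval.",
    "VPN access is renewed every 90 days.",
]
question = "Who approves refund requests?"
context = retrieve(question, internal_docs)[0]
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

Because both the document store and the model run inside the organization's environment, the retrieved context never has to leave it.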

Public AI prioritizes accessibility, while private AI prioritizes trust, control, and customization, making it the preferred path for enterprises handling sensitive information.

What are the benefits of private AI?

Private AI offers several distinct advantages over public AI. Beyond enhanced data control and security, it can also deliver significant performance and cost benefits.

Full control

Private AI gives organizations full control over how models are built, deployed, and integrated into IT environments. This enables better alignment with internal systems, including legacy infrastructure, and allows continuous evaluation to prevent vulnerabilities. Because private models are trained on proprietary internal data, outputs are tailored to specific business needs while reducing the risk of exposing sensitive information or using competitor data.

Enhanced data security

With private AI, organizations retain full responsibility for data residency, access controls, and policy enforcement, without relying on third-party platforms. This improves protection against data leakage, minimizes the risk of inaccurate outputs (hallucinations), and ensures sensitive information stays internal. Hosting and managing the AI lifecycle internally also enhances visibility for audits and compliance tracking.

Regulatory compliance

Private deployments make it easier to comply with frameworks like HIPAA, GDPR, and GLBA by embedding enforcement directly into infrastructure. Enterprises can control where data is stored, processed, and transferred, which is critical for navigating multi-jurisdictional requirements.

Performance and efficiency

Running models locally — whether on-prem or in a private cloud — reduces latency, boosts throughput, and improves responsiveness. Teams can continuously optimize models for enterprise-specific workloads without relying on vendor roadmaps.

What are the challenges and limitations of implementing private AI?

While private AI offers clear benefits in control and security, it also presents unique challenges that organizations must navigate carefully, especially when moving from pilot to production.

IT infrastructure complexity

Integrating a private AI model with existing infrastructure can be complex. Each component must be compatible and secure to avoid operational disruption or exposure to cyber threats. Private AI can be deployed on-premises, in a private cloud, or as a hybrid of the two — the right choice depends on your current systems and regulatory needs.

Scalability constraints

Scaling private AI is less straightforward than using cloud-native services. Enterprises must plan for future growth, model retraining, and system upgrades — especially in deployments that lack elasticity or require custom configuration.

Internal expertise requirements

Deploying and managing private AI demands skilled IT, data, and security teams. Without in-house MLOps capabilities, organizations may face delays or inefficiencies, making external partners essential for implementation, model tuning, and lifecycle support.

Cost

Private AI typically involves a higher upfront investment for infrastructure and deployment. However, costs are often more predictable, with no fluctuating usage-based subscription fees. Because data remains internal, there are also no added costs for data transfers, supporting better budget planning.

Best practices for private AI deployment

Before adopting private AI, organizations should define clear objectives and map out the critical steps needed to achieve them. A structured approach helps ensure alignment across infrastructure, security, and strategic goals.

Assess IT infrastructure

Evaluate existing IT systems to confirm readiness for private AI deployment. This includes reviewing internal capabilities, identifying skill gaps, and flagging areas that may require external support or additional resources. Traditional infrastructure may need to be adapted, particularly to strengthen security and ensure seamless integration. Investments in high-performance computing (HPC) and secure networking may be needed for production-grade workloads.

Plan for ongoing evolution

Private AI environments must be built with future growth in mind. Select software and hardware that align with long-term needs and compliance obligations. Effective planning should address each phase of the deployment lifecycle, including realistic financial assessments for initial and ongoing investments.

Establish governance and security policies

Define clear policies for data governance, model access, and auditability. Ensure all training data is pre-validated and of high quality. Align your security posture with frameworks like the NIST AI Risk Management Framework (AI RMF) or SOC 2, and deploy within architectures that support data residency, encryption, and role-based access controls.
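
Role-based access control can start from something as simple as a deny-by-default permission table. The sketch below is one possible shape for such a check. The role names and permission strings are hypothetical and would be replaced by whatever an organization's identity provider and policies define.

```python
# Hypothetical roles and permissions for a privately deployed model.
ROLE_PERMISSIONS = {
    "data_scientist": {"model:infer", "model:fine_tune"},
    "analyst": {"model:infer"},
    "auditor": {"logs:read"},
}

def is_allowed(role, permission):
    """Deny by default: unknown roles and unlisted permissions are rejected."""
    return permission in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("analyst", "model:infer"))      # True
print(is_allowed("analyst", "model:fine_tune"))  # False
print(is_allowed("contractor", "model:infer"))   # False
```

Keeping the table explicit makes it straightforward to review during audits and to extend as new roles are introduced.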

Private AI is a strategic necessity for enterprises and large organizations. With 58% of employees regularly using AI tools at work, often beyond IT’s oversight, the urgency for secure, governed AI systems is only growing.

As enterprises seek to harness generative AI without compromising compliance, security, or control, private AI offers a focused, scalable path forward. It empowers organizations to align AI with internal data policies, industry regulations, and operational goals, without surrendering sensitive information to public models.

However, realizing the full value of private AI demands more than interest; it requires deliberate execution. Success hinges on infrastructure readiness, upskilling teams, and embedding AI into secure, well-governed workflows.

Enterprises that move early and thoughtfully will not only reduce risk but also gain a lasting edge in performance, agility, and innovation.