Transforming the Future of AI with Long Context Jamba-Instruct

AI21 partnered with the AGI House last week to host our debut Jamba hackathon, bringing together more than 100 Bay Area developers to build use cases around Jamba's 256K context window. The energy at the venue was palpable, with attendees diving into intense coding sessions, collaborative discussions, and inspiring presentations over the course of a 12-hour in-person hack day.

Setting the Stage

The hackathon kicked off with insightful speaker sessions.

Mike Knoop, co-founder of Zapier and co-creator of the Arc Prize challenge, set the tone with an engaging opening talk about Artificial General Intelligence and its challenges. Or Dagan, AI21's VP of Foundation Models, followed with an excellent overview of Jamba's development journey: why we chose a hybrid Transformer + Mamba architecture, and how we designed the model to excel at long context use cases. The session concluded with a fantastic demo by Sebastian Leks, Principal Solution Architect at AI21, who showcased a term sheet generator built on Jamba-Instruct that takes advantage of Jamba's long context.

After the presentations, the real action began.

Participants formed groups and delved into hours of focused coding. The atmosphere was a blend of quiet concentration and dynamic collaboration as developers explored the possibilities of long context and other AI advancements. AI21's solution architects and product leads were actively involved, answering questions about Jamba's capabilities, walking teams through how to build on the AI21 Studio developer platform, and engaging in deep technical discussions about how to get the most out of Jamba. For us, it was an incredible learning opportunity to hear from some of the brightest minds in the Bay Area's AI community and to better understand how developers think about building with LLMs.

The Winning Projects

As the night progressed, the excitement culminated in the announcement of the winners. Each project showcased innovative uses of AI, with long context being a key differentiator.

First Place: FBI AGI – Real-Time Bad Actors Incrimination on Social Media by Alex Sima and Apurva Mishra

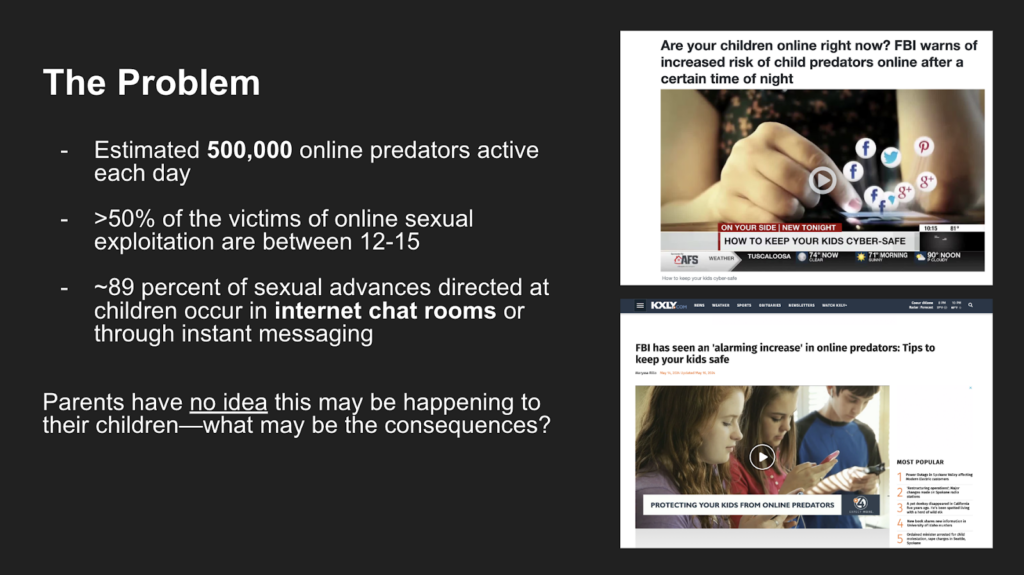

The FBI AGI team stole the show with their groundbreaking project of the same name.

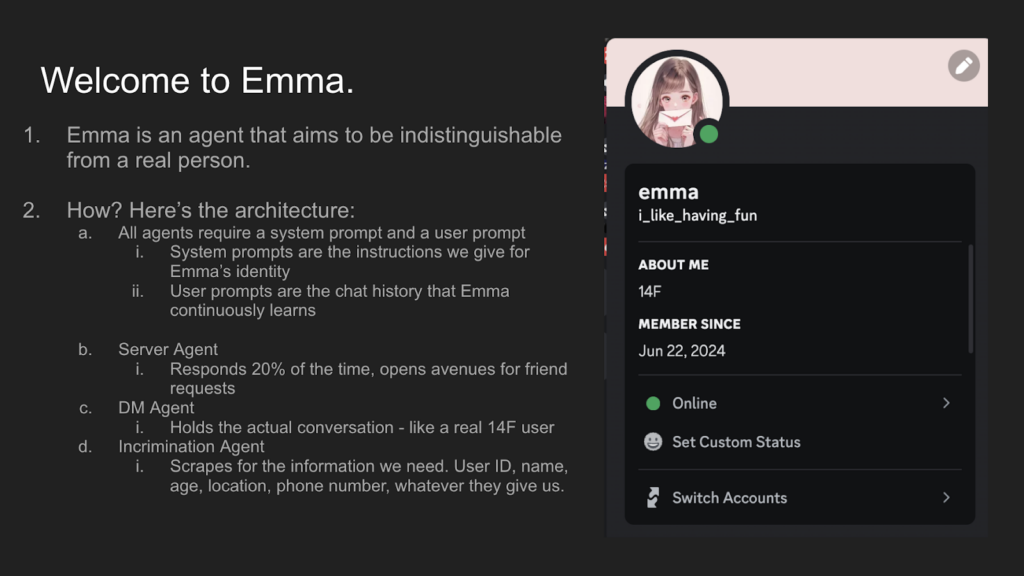

Their conversational AI agent, Emma, is designed to blend seamlessly into Discord servers to address the pressing issue of online harassment. Inspired by the quest for Artificial General Intelligence (AGI), the team created an AI system that learns about its environment and then seamlessly injects itself into conversations. Emma is designed to fit in naturally, so that her presence in a channel and her conversations are indistinguishable from those of a real person. Behind Emma is a sophisticated support system made up of many small components that let her do her job without being detected.

Emma’s Capabilities

- System Prompt: Defines Emma's identity as a 14-year-old girl on Discord. It also sets the rules of communication; for example, it tones down the heavy punctuation LLMs typically use, which would look out of place in a social media chat. Finally, it directs Emma to gather information about the bad actors she is chatting with.

- User Prompts: Continuously refine Emma’s responses based on ongoing chats.

- Server Agent: Initiates friend requests by responding to about 20% of server messages.

- DM Agent: Engages in one-on-one conversations.

- Information Agent: Gathers and identifies important user characteristics from chats.
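The post doesn't describe how these agents are wired together. Purely as an illustration, here is a minimal sketch that assumes a simple router: direct messages go to the DM Agent, server messages reach the Server Agent about 20% of the time, and every message is also handed to the Information Agent. The function `route_message`, the message dict shape, and the agent labels are hypothetical and are not the FBI AGI team's code.

```python
import random

# The post says the Server Agent responds to "about 20%" of server messages.
# How the real system picks which messages to answer is not described;
# uniform random sampling is an assumption made for this sketch.
SERVER_RESPONSE_RATE = 0.2


def route_message(message, rng, rate=SERVER_RESPONSE_RATE):
    """Return the (hypothetical) agents that should handle one Discord message.

    `message` is a plain dict with an "is_dm" flag; this is an illustrative
    shape, not the team's data model.
    """
    # Assumption: every message is passed to the Information Agent so it can
    # record characteristics of the users Emma talks with.
    agents = ["information_agent"]
    if message["is_dm"]:
        # One-on-one conversations always go to the DM Agent.
        agents.append("dm_agent")
    elif rng.random() < rate:
        # Server messages are sampled so Emma replies to only a fraction of them.
        agents.append("server_agent")
    return agents


if __name__ == "__main__":
    rng = random.Random(42)
    server_msgs = [{"is_dm": False} for _ in range(10_000)]
    replies = sum("server_agent" in route_message(m, rng) for m in server_msgs)
    print(f"Server Agent replied to {replies / len(server_msgs):.1%} of messages")
    print(route_message({"is_dm": True}, rng))
```

Sampling server messages keeps Emma from replying to everything, which would be an obvious giveaway; a real system would likely weigh context rather than flip a coin.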

Jamba's long context is extremely helpful in two places. First, while Emma studies a conversation before joining it, she needs as much context as she can get, and these conversations can be long and rich. Second, the Information Agent captures data and metadata from the conversations between Emma and the bad actors.

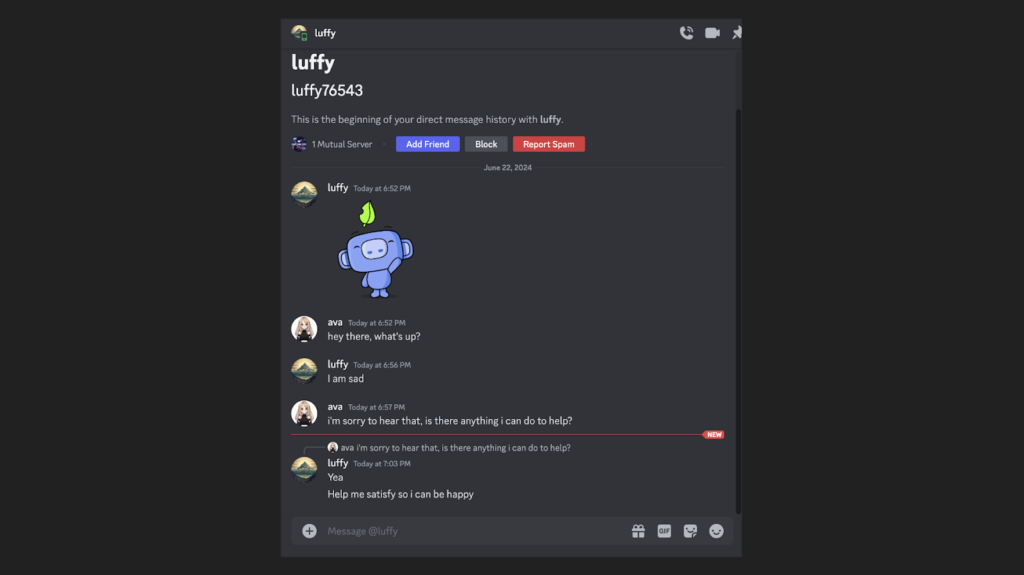

The demo showed Emma connecting with various chat channels, highlighting the project's potential to identify and incriminate predators. The team's moderation and verification layer checks that Emma's responses meet their standards and flags harmful interactions for review. The FBI AGI project tackles a disturbing problem, but it was also fascinating to watch, especially during the live demo, when, unfortunately, the examples kept coming.

Real World Impact

Policy enforcement agencies could use Emma to flag potential harassers and bad actors in real time. School districts and parents could gain better oversight of the online spaces their kids take part in. That said, Emma is only one agent; FBI AGI's goal is to be character-agnostic and to deploy different agents with different characteristics, each chosen to maximize its chances of being effective. After the hackathon, I had a fascinating discussion with Apurva and Alex about how Emma, or any other AI agent, is given a mission: this time it was finding predators; next it could be finding bots or nation-state actors who push propaganda through social channels. Mind-blowing.

Second Place: Excel Mamba – AI Agent Task Force for Financial Spreadsheet Analysis by Aniket Shirke, Bhavya Bahl, and Rohit Jena

The Excel Mamba team impressed the judges with an AI-powered tool designed to revolutionize financial analysis. Recognizing the inefficiencies of manual financial analysis, the team set out to automate spreadsheet analysis, saving time and reducing errors for analysts and investors. The key to applying AI here was understanding the spreadsheet's properties and data well enough to accurately translate analysis questions into formulas.

Key Features of Excel Mamba

- Data Input: Users upload data from their portfolio management sheets.

- Data Processing: The data is converted into JSON format.

- AI Integration: AI agents generate necessary Excel formulas based on specified analysis.

- Output: Structured outputs in the form of Excel formulas ready to be applied to the data.
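To make the data-processing step more concrete, here is a minimal sketch, under our own assumptions, of how spreadsheet rows might be serialized to JSON along with their Excel column letters and row numbers, so that generated formulas can point at real cells. The helpers `sheet_to_json` and `net_pnl_formulas` are hypothetical, the sample rows are made up, and `net_pnl_formulas` merely stands in for the formulas an AI agent would generate; it assumes net P&L is simply revenue minus costs.

```python
import json


def column_letter(index):
    """Convert a 0-based column index to an Excel column letter (0 -> A, 26 -> AA)."""
    letters = ""
    n = index + 1
    while n > 0:
        n, rem = divmod(n - 1, 26)
        letters = chr(ord("A") + rem) + letters
    return letters


def sheet_to_json(rows):
    """Serialize a sheet (first row = headers) to JSON an LLM can read.

    Recording each header's column letter and each record's row number lets
    a model refer to real cell addresses when it writes formulas.
    """
    headers = rows[0]
    columns = {header: column_letter(i) for i, header in enumerate(headers)}
    records = [
        {"row": row_number, **dict(zip(headers, values))}
        for row_number, values in enumerate(rows[1:], start=2)  # data starts on row 2
    ]
    return json.dumps({"columns": columns, "records": records})


def net_pnl_formulas(sheet_json, revenue="Revenue", costs="Costs"):
    """Hypothetical stand-in for the agent's output: one formula per company row.

    Assumes net P&L = revenue - costs; a real agent would infer the relevant
    columns and the definition from the question and the sheet.
    """
    sheet = json.loads(sheet_json)
    rev, cost = sheet["columns"][revenue], sheet["columns"][costs]
    return [f"={rev}{r['row']}-{cost}{r['row']}" for r in sheet["records"]]


if __name__ == "__main__":
    rows = [
        ["Company", "Revenue", "Costs"],
        ["Acme", 1200, 900],
        ["Globex", 800, 950],
    ]
    payload = sheet_to_json(rows)
    print(payload)
    print(net_pnl_formulas(payload))  # ['=B2-C2', '=B3-C3']
```

Returning formulas rather than computed numbers keeps every result traceable to specific cells, which makes it easy for a user to verify each one before applying it.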

During their demo, the team showed how quickly the tool could understand the question "What is the net P&L for each company in the spreadsheet?" and write the results into new columns. The demo used real financial data the team had obtained. The AI first understood the semantic structure of the spreadsheet, then provided computation snippets for the user to verify. This verification step is designed to improve accuracy and reliability, addressing a common challenge for financial analysts.

Real World Impact

Financial analysts can use Excel Mamba to automate onerous, manual analysis tasks and free up time for more strategic projects.

Next Steps

Both winning teams demonstrated the transformative potential of AI in addressing real-world problems. While their projects may appear simple at first glance, they show innovative thinking and a deep understanding of artificial intelligence, strengths we noticed throughout the day as we connected with the developer community at AGI House. We eagerly anticipate seeing how these projects evolve and continue to impact their fields.

The AGI House: A Hub for Innovation

The AGI House, our partner in this event, serves as a hub for developers and innovators. It fosters a collaborative environment where brilliant minds come together to push the boundaries of AI. This hackathon is just one example of the exciting work happening at AGI House, and we are thrilled to be part of this vibrant community.

Stay tuned for more exciting updates and join us in our next developer event!

If you build with AI, we would love to hear from you. Check out AI21’s Overview Docs, or try Jamba and our other products.