How Major Retailers Personalize the Buy with AI

Come mid-January there are two things the retail industry can be sure of:

1) About one in four consumers will have already quit their New Year’s Resolutions; and

2) The NRF Big Show will showcase how the latest technological innovations, such as LLM chatbots, will impact the retail industry.

Retail businesses are increasingly reliant on data, and lots of it. The industry thrives when it can rapidly identify trends, understand buyer personas and optimize inventories. Harnessing the power of Generative AI to process this data, analyze it, and make decisions and recommendations based on it will fundamentally change the way we do business.

The retail industry has fully embraced digital commerce and all its potential, finally catching up to the generations of consumers who barely know any other way. It is a fast-paced world in which displays change based on who’s seeing them; people discover, shop, and buy while at a stoplight; and customers are yours only for as long as it takes them to click the next link. When everything is moving so quickly, acting swiftly to become an early adopter of AI can be the difference between sold-out collections and sending truckloads to TJ Maxx and Marshalls. The adoption of AI and retail chatbots opens up endless possibilities to develop personalized offers and deliver them with personalized messages. This will empower retailers to enhance user experiences, streamline operations and ultimately drive business growth. Fortune favors the bold.

You might wonder about the role of GenAI in retail. Thanks to their natural language processing capabilities, LLMs (Large Language Models) let you interact with your information systems in plain, conversational language. Think of the most knowledgeable, alert and articulate salesperson you know, then add real-time access to inventory levels and customer purchase histories, and remove the need for a coffee break. This is exactly what LLM chatbots can be for you.

Delivering tailored retail experiences with GenAI

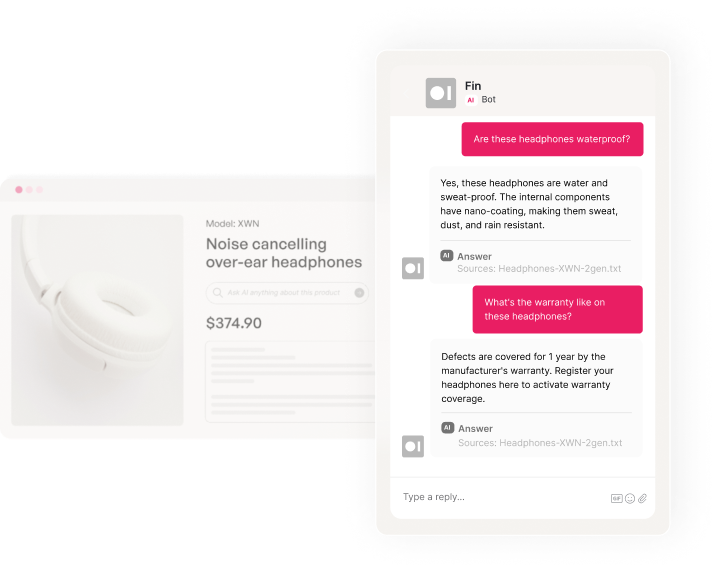

While chatbots are not new, the responses that pre-AI versions provided were often rigid, general and clearly scripted. More importantly, the logic was easily sidetracked by the use of unfamiliar slang or vernacular. With natural language capabilities, LLM chatbots have a better grasp of the context of the question and the flexibility to provide an informative and useful response in a much shorter time.

One of the most talked-about applications for this is personalized customer support. Customers benefit from a humanized interaction in which they can communicate freely and reach a resolution with much less friction and frustration. Another holy grail for retail chatbots is the Private Shopping Assistant. For consumers who are more comfortable with digital interactions, an assistant that is both discreet and familiar with their preferences and past purchases is the perfect confidant for questions ranging from sizes and prices to availability and accessories.

LLMs can also assist retailers with back-office tasks, such as preparing effective product descriptions. With practically infinite authoring resources, retailers can optimize the descriptions for an inventory of any size and then duplicate them with targeted tweaks to catch the eye of each buyer persona.
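To make the "duplicate with targeted tweaks" idea concrete, here is a minimal sketch of how one base prompt could be varied per buyer persona before being sent to a text-generation model. Everything in it is an illustrative assumption: the `Product` class, the persona names, the wording of the angles and the `build_prompts` helper are hypothetical, and the sketch stops short of calling any model.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Product:
    """Hypothetical product record; a real catalog would carry more fields."""
    name: str
    features: Tuple[str, ...]


# Hypothetical buyer personas, each mapped to the angle the copy should take.
PERSONAS: Dict[str, str] = {
    "budget_shopper": "Emphasize value for money and durability.",
    "trend_seeker": "Emphasize style and what is new this season.",
}


def build_prompts(product: Product, personas: Dict[str, str] = PERSONAS) -> Dict[str, str]:
    """Return one prompt per persona: a shared base plus a persona-specific angle."""
    base = (
        f"Write a product description for {product.name}. "
        f"Key features: {', '.join(product.features)}."
    )
    return {name: f"{base} {angle}" for name, angle in personas.items()}


prompts = build_prompts(Product("Trail Runner 2", ("waterproof", "lightweight")))
for persona, prompt in prompts.items():
    print(persona, "->", prompt)
```

Keeping the factual part of the prompt (name and features) in one place and varying only the angle means every persona-specific description starts from the same product facts.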

Since retailers (like most organizations) don’t always have the technical resources to set up and train a Generative AI model from scratch, AI21 has developed Task-Specific Models (TSMs). Each TSM is designed and trained to excel at one specific job, one natural language capability. For example, the Semantic Search TSM allows users to freely express what they are looking for and receive results based on what they meant, not what they typed. The Contextual Answers TSM takes information that the organization feeds into the system and uses it to provide focused, informative responses to questions that end users submit. Its output is grounded in the retailer’s well-defined subject matter and can be incorporated into customer experiences in a fraction of the time it would take to create an AI model from scratch, and with much lower error rates.
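The idea of a grounded answer can be illustrated with a small, self-contained sketch. This is not AI21's API and not how a TSM works internally: `answer_from_context` is a hypothetical stand-in that uses plain keyword overlap, and the store policy text is invented for the example. What it shows is the contract described above: the answer comes only from content the retailer supplied, and when that content does not cover the question, nothing is returned instead of a guess.

```python
import re
from typing import Optional, Set

# Common words ignored when matching a question against the context.
STOPWORDS = {
    "the", "a", "an", "is", "are", "do", "does", "you", "in", "of", "to",
    "what", "how", "for", "it", "i", "can", "your", "my",
}


def _keywords(text: str) -> Set[str]:
    """Lowercase the text and return its words minus the stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def answer_from_context(context: str, question: str, min_overlap: int = 2) -> Optional[str]:
    """Hypothetical stand-in for a grounded question-answering model.

    Returns the context sentence sharing the most keywords with the question,
    or None when no sentence shares at least `min_overlap` keywords.
    """
    question_words = _keywords(question)
    best_sentence, best_score = None, 0
    for sentence in re.split(r"(?<=[.!?])\s+", context.strip()):
        score = len(question_words & _keywords(sentence))
        if score > best_score:
            best_sentence, best_score = sentence, score
    return best_sentence if best_score >= min_overlap else None


# Invented policy text standing in for the retailer's trusted content.
store_policy = (
    "Returns are accepted within 30 days of purchase. "
    "Standard shipping takes 3 to 5 business days. "
    "Gift cards cannot be refunded."
)

print(answer_from_context(store_policy, "How long does standard shipping take?"))
print(answer_from_context(store_policy, "Do you sell bicycles?"))
```

The first question is answered with the shipping sentence from the policy; the second returns `None` because the policy says nothing about bicycles. A production model would understand meaning far better than keyword matching, but the "answer from supplied content or decline" behavior is the part that matters for customer-facing use.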

There’s a simple way to implement GenAI

The vision of implementing a Generative AI solution in a working retail business can seem both fantastic and daunting, the ultimate “we’ll do it next year” project. With TSMs, you no longer have to wait, as they are faster to deploy, easier to customize and cheaper to implement and maintain. They do not require the vast, sometimes complex, datasets that general-purpose AI models do; rather, they rely only on data sources that are trusted by the retailer. They do not need to support a broad range of query types, only the types that serve the use case, making it much easier to anticipate and secure the inputs. Their focused nature means that they are leaner to operate, with a much smaller footprint that requires fewer resources and leads to lower latency. They are purpose-built, equipped with various built-in customization and verification mechanisms that help ensure that outputs are more accurate, reliable and grounded. The result is a simpler implementation, one that can be done by a single developer with a single line of code.

The retail industry has realized the importance of achieving the levels of customization that consumers have come to expect. Task-Specific Models help deliver on this ambitious goal by helping retailers harness the power of generative AI personalization with minimal effort. The experienced team at AI21 is available to provide tailored guidance and dedicated support to help you reach your goals. Whether you’re looking to automate and optimize unique product descriptions, enable self-service support and rapidly resolve customer issues, or generate targeted marketing messages that speak to each buyer, Task-Specific Models offer the most practical path forward.

The era of conversational commerce is now

It is no longer outlandish to state that we are entering the age of AI, an era in which we use massive amounts of data to identify patterns, predict outcomes and make corresponding decisions in microseconds. Retail is a high-volume, high-touch industry, where most companies have made the digital transformation needed to generate that data. Feeding that data into generative AI models with natural language processing will result in an ultra-personalized, highly scalable user experience. Consumers will be able to naturally converse with your applications to ask questions, seek recommendations and make purchases. Staff will be able to study behaviors, optimize logistics and create finely tuned messaging. The only drawback has been the cost and the duration of setting it all up.

Task-Specific Models overcome this hurdle by offering a new paradigm for enterprises seeking to implement AI. With TSMs you can build applications such as LLM chatbots in a reliable, simple and cost-effective manner to solve real business challenges. Retailers can enjoy a faster time-to-value and, thanks to lowered costs, a clear ROI.

Don’t get left behind – AI is unlocking new levels of customization. Whether you’re looking to boost conversion rates, improve customer satisfaction, or create infinite scalability, our AI solutions make personalization effortless. Contact us to learn more.