How Contextual Answers Transforms Customer Support

Delivering exceptional customer support is more challenging than ever before. Customers expect fast, comprehensive solutions to increasingly complex issues, putting immense pressure on support teams. Yet sifting through vast knowledge bases to find relevant answers is inefficient and wastes precious time.

AI21 Labs has identified gaps where Generative AI can create fast, tangible impact for businesses, starting with their customer support teams. ‘Contextual Answers’ is an AI system based on question-answering technology that allows support agents to search their company’s extensive knowledge bases to rapidly provide customers with accurate, personalized solutions. It can help businesses boost agent productivity, reduce handling times, and increase their first-call resolution rates – all the while enhancing customer satisfaction.

This article will explore how Contextual Answers integrates into existing workflows to boost productivity. You’ll learn how it empowers support teams to deliver the responsive, satisfying experiences customers demand in today’s highly competitive landscape.

The Growing Pains of Customer Support

Studies show that increasing customer retention by just 5% can boost profits by 25%-95%. And the number of repurchases and renewals also increases by 82% when the customer receives excellent and prompt service. Despite the fact that high-quality customer service boosts profits and loyalty, achieving it is a formidable challenge.

Costs

One major pain point is the substantial cost of staffing large, round-the-clock support teams to meet today’s expectations of instant, reliable responses. Even then, delivering personalized, researched answers quickly strains resources.

Effectiveness

Even large teams struggle to resolve queries quickly and correctly. Inquiries often require extensive research and tailored responses, lengthening wait times and frustrating customers.

Self-Service

Despite readily available website information, users often escalate straightforward questions to agents. This overburdens staff and disappoints customers. Improving accessibility is key for satisfaction and efficiency.

Using Contextual Answers to Overcome Support Challenges

What is ‘Contextual Answers’?

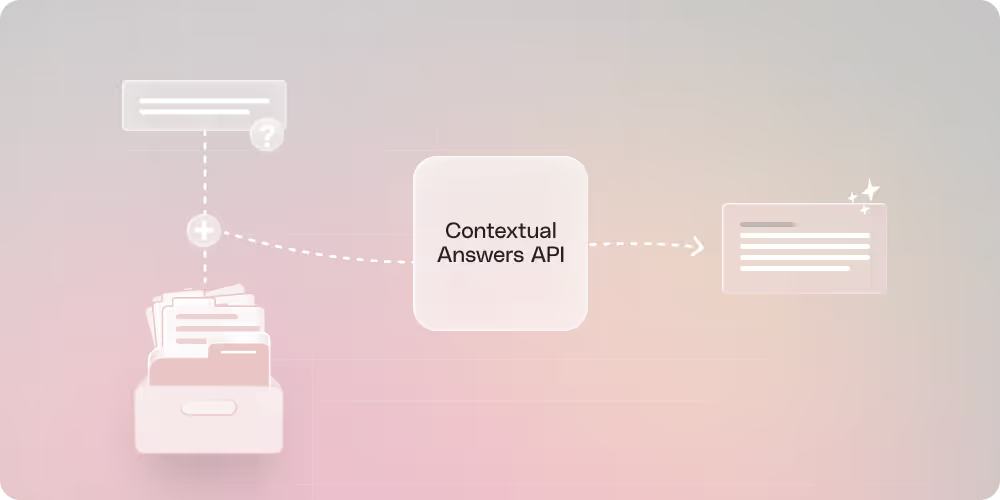

Contextual Answers is a task-specific Generative AI system, based on large language models (LLMs), that provides accurate responses to questions based on a company’s data. It lets users ask natural language questions and receive answers grounded in the uploaded documents and information fed into the LLM. This grounding helps prevent the fabricated responses common with AI, known as AI hallucinations.

At AI21 Labs, we have built a comprehensive end-to-end API solution that allows companies to upload their documents and lets their employees or customers ask questions in natural language. The responses rely solely on the information provided. In addition, the Contextual Answers tool provides a reference to the source from which each answer was taken, strengthening the credibility of its answers.

How Contextual Answers Can Improve Customer Support

There are two main ways to use Contextual Answers for customer support:

- Improve Efficiency

The Contextual Answers system gives customer support agents quick access to relevant information. This makes it faster for them to find answers when customers ask questions, as it significantly cuts down research time. This results in shorter wait times for customers – a significant metric for customer satisfaction.

- Automate Responses

The Contextual Answers system can be built into the company’s website as a dynamic chatbot or refined search bar. This lets it directly answer common customer questions instantly and accurately. The company then doesn’t need to handle questions already answered on its site. This focuses support efforts on complex issues and improves team efficiency.

Companies can use one or both approaches. Either way, the Contextual Answers system reduces the need for large support teams while improving customer satisfaction. The benefit is two-fold: lower support staffing costs, and higher profits from retaining satisfied customers.

How to Implement Contextual Answers in Your Company

1. Make a Plan

The first step is to make a plan for what you want to achieve. Decide if Contextual Answers will be used internally, externally, or both. At this point your company will also need to choose an LLM provider, exploring factors such as price, the latency of the LLMs, the throughput (how many questions per user), API quality and ease of use.

You’ll also have to decide if you need multiple languages. If so, you’ll need to add a translation step to the process, and pick which languages to include.

For example, an online bank approached us with the need to improve their customer support.

Being primarily online, one of their main features is having available support 24/7, and Contextual Answers was a perfect fit for this use case.

During the initial planning phase, this digital bank determined that, for their specific use case, an externally-facing customer solution best suited their needs. A user-friendly chatbot was developed to respond to customer inquiries and provide relevant answers. Fast, reliable responses grounded in the company’s data were the priority.

It was equally important for them to maintain consistency in tone, format, and response length during customer interactions. They also added a translation component to the process, recognizing the multilingual nature of their clientele.

During the planning phase, these decisions were used as guides to streamline our processes and align them with the bank’s specifications.

2. Curate and Label the Data

Once your company has its plan in place, relevant data must be compiled and curated.

If the system is for external use, and customers will be interacting with it, the required data should also be publicly available to customers. For internal use by the support team, the company can also incorporate non-public policies and guidelines.

After the data has been collected, it needs to be labeled accordingly.

For instance, a SaaS company might have premium, gold, and platinum tiers of customers. These tiers use different product features and need different answers to their questions. It’s important for the company to tag each data item with the tier to which it belongs in order to provide the right answers. So when a customer asks a question, Contextual Answers will know which data to extract the information from, and customers will get answers that match their product features and tier.
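The tier tagging described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `SupportDoc` class, the `docs_for_tier` helper, and the sample documents are hypothetical and are not part of the Contextual Answers API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SupportDoc:
    """A curated support document tagged with the tiers it applies to (hypothetical schema)."""
    doc_id: str
    text: str
    tiers: frozenset


# Hypothetical sample data: each item is labeled with its customer tier(s).
DOCS = [
    SupportDoc("export-basic", "Premium plans can export reports as CSV.",
               frozenset({"premium"})),
    SupportDoc("export-advanced", "Gold and platinum plans can schedule automated exports.",
               frozenset({"gold", "platinum"})),
    SupportDoc("billing", "Invoices are issued on the first day of each month.",
               frozenset({"premium", "gold", "platinum"})),
]


def docs_for_tier(docs, tier):
    """Return only the documents labeled for the given customer tier, in original order."""
    return [doc for doc in docs if tier in doc.tiers]


# Only these documents would be passed as context for a gold-tier customer.
gold_context = docs_for_tier(DOCS, "gold")
```

Filtering before the question is answered means the system only ever sees documents that match the customer’s tier, which is one simple way to keep answers consistent with their product features.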

You can even go further, and personalize the answer according to the customer’s profile, giving them a customized and accurate response.

In the case of the digital bank that we worked with, they created a database of extensive support documents that included all of the information that they were authorized to provide to their customers. This database was the LLM’s single source of truth. It was the context used to answer customer questions, and unanswerable questions were escalated to human representatives. They also aggregated previously asked questions with ideal responses to refine answer formats.
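The escalation pattern the bank used, answering from the support documents and handing unanswerable questions to a human, might look roughly like the sketch below. Everything here is an assumption for illustration: `handle_question`, `toy_answer_fn`, and the escalation message are hypothetical, and `toy_answer_fn` is a naive keyword matcher standing in for the real question-answering model.

```python
from typing import Callable, Optional

# Hypothetical wording; a real deployment would use the company's own message.
ESCALATION_MESSAGE = (
    "I could not find this in our support documents. "
    "Connecting you to a representative."
)


def handle_question(
    question: str,
    context: str,
    answer_fn: Callable[[str, str], Optional[str]],
) -> dict:
    """Answer from the context, or escalate when the answer function returns None."""
    answer = answer_fn(context, question)
    if answer is None:
        return {"answer": ESCALATION_MESSAGE, "escalated": True}
    return {"answer": answer, "escalated": False}


def _words(text: str) -> set:
    """Lowercased words with surrounding punctuation removed."""
    return {w.strip("?.,!").lower() for w in text.split()}


def toy_answer_fn(context: str, question: str) -> Optional[str]:
    """Stand-in for the model: return the first context sentence that shares
    a keyword of five or more letters with the question, else None."""
    keywords = {w for w in _words(question) if len(w) >= 5}
    for sentence in context.split(". "):
        if keywords & _words(sentence):
            return sentence.strip().rstrip(".") + "."
    return None
```

Passing the answer function in as a parameter keeps the escalation logic separate from whichever model or API actually produces the answer.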

This shows how thoughtful data gathering, organization and labeling ensures Contextual Answers has the appropriate contextual information to deliver precise, personalized responses. This strengthens accuracy while mirroring company guidelines.

3. Develop & Deploy Contextual Answers

In order for Contextual Answers to work, the company must synchronize the relevant, curated documents with their chosen AI21 large language model. At this stage, the LLM provider will meticulously customize the Contextual Answers tool to precisely meet the company’s needs.

When using Contextual Answers for customer support, a company can adjust the tone of communication based on individual customer profiles. A customer who is older may need a more sophisticated response, while someone who is younger may need a more casual response. As well as extracting personalized information, the LLM can extract answers from distinct document sets for each query.

Additionally, for a retail company, Contextual Answers can pull from different product manuals and catalogs to find answers based on what the customer purchased. By customizing which documents are searched, it provides responses tailored to each customer’s specific items.

Once the product is developed to meet the company’s specifications, it is deployed into their operational framework. In the case of the digital bank’s AI chatbot, it was integrated into the bank’s application so that it was always accessible to users.

Whether you need to deploy a solution locally or globally, AI21 Labs offers an easy plug-and-play option.

4. Continuous Evaluation

In this final phase of the process, it remains vital to continuously evaluate Contextual Answers’ performance by thoroughly checking the user experience, whether for employees, customers, or both. An essential component of this evaluation is validating the accuracy of the generated answers.

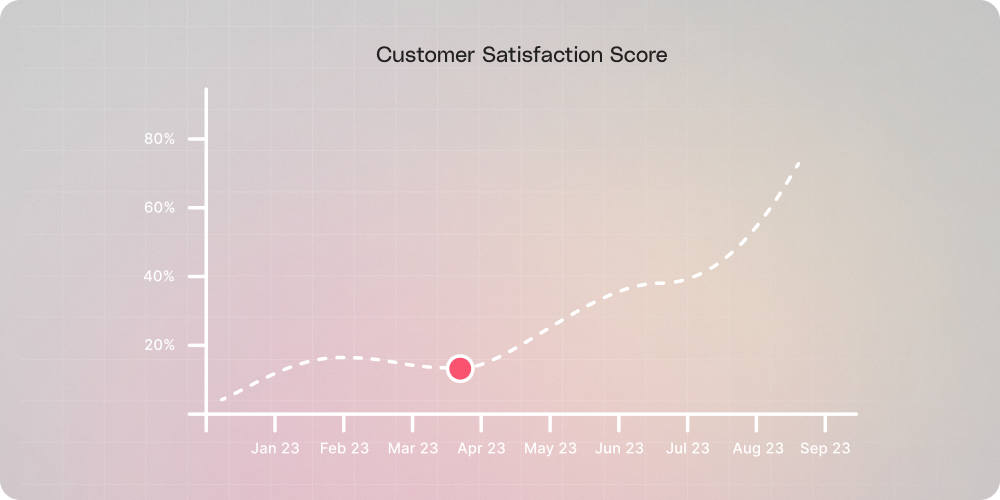

Ongoing monitoring of key metrics is also important. This includes an in-depth analysis of key performance indicators, such as customer satisfaction metrics, retention rates, cost efficiencies, and revenue maximization. This comprehensive evaluation framework provides insights into the extent to which Contextual Answers genuinely contributes to the company’s expansion trajectory, while also revealing opportunities for improvement.

In the case of the digital bank, we created a grading system that scored each chatbot answer from 1 to 5, where 1 was unacceptable and 5 was ideal. This process ensured that acceptable responses aligned with the bank’s requirements. The bank will need to continue scoring its chatbot answers to maintain quality.
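As a rough illustration of how such 1-to-5 grades could be tracked over time, here is a small Python sketch. The `summarize_grades` function, the record format, and the review threshold of 3 are assumptions made for this example; the article does not specify how the bank aggregated its scores.

```python
from statistics import mean

# Assumption: answers scoring below this value are sent back for review.
REVIEW_THRESHOLD = 3


def summarize_grades(graded_answers):
    """Summarize reviewer grades, where 1 is unacceptable and 5 is ideal.

    Each item is a dict with a "question" string and an integer "score".
    """
    if not graded_answers:
        raise ValueError("at least one graded answer is required")
    scores = [item["score"] for item in graded_answers]
    if any(score < 1 or score > 5 for score in scores):
        raise ValueError("scores must be between 1 and 5")
    needs_review = [
        item["question"] for item in graded_answers
        if item["score"] < REVIEW_THRESHOLD
    ]
    return {"mean_score": mean(scores), "needs_review": needs_review}


# Hypothetical grades from one review session.
report = summarize_grades([
    {"question": "How do I reset my PIN?", "score": 5},
    {"question": "What are the transfer limits?", "score": 5},
    {"question": "Can I open a joint account?", "score": 2},
])
```

Tracking the mean score alongside the list of low-scoring questions shows both the overall trend and the specific answers that need attention.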

As a result of maintaining the chatbot’s high quality, the bank has seen some positive results, including better customer satisfaction and a smaller customer support team, which reduces costs.

Conclusion

As customers demand ever-faster and more personalized support, Contextual Answers represents a powerful opportunity. This AI-powered solution streamlines workflows while automating repetitive tasks. Support agents can rapidly address inquiries by tapping into collective knowledge. Meanwhile, self-service options reassure customers their needs are heard.

For organizations seeking to strengthen support and boost loyalty, Contextual Answers is a strategic investment. Don’t leave gains on the table – contact our experts today to explore how Contextual Answers can help your business thrive.